It feels like we’re entering another round of AI search misinformation.

The relationship (both economic and technical) between publishers, platforms, marketers, and consumers continues to evolve at a breakneck pace with things like ChatGPT Apps, Claude Cowork, and agentic commerce, so it’s very true that AI is changing how the web works as a platform.

Charitably, sometimes folks confuse a vision for how AI might work in the future with how AI platforms actually work today. Uncharitably, sometimes folks have a habit of letting confidence outpace evidence.

As with most things, there are no silver bullets in AI search optimization, but it is possible to systematically diagnose and improve how agents consume your content.

Here’s my take on the fundamentals of optimizing your content to be found, indexed, and correctly understood by AI—both in AI search platforms like ChatGPT and Perplexity, and even in coding agents like Claude Code and Codex.

Worth noting: Training data collection is another story. Optimizing the content you make available to AI labs to train future models requires different tactics and a longer-term strategy. Most of the dialogue on AI search I see is about search and retrieval, so that’s the focus here. This also isn't intended to cover PR/media relations strategies in the AI era, which are also rapidly evolving.

Foundational hot takes that should be ice cold

First, the foundation:

1. By default, you should serve HTML to AI agents. HTML is the lingua franca of the web. It’s what AI bots expect to consume in almost all cases.

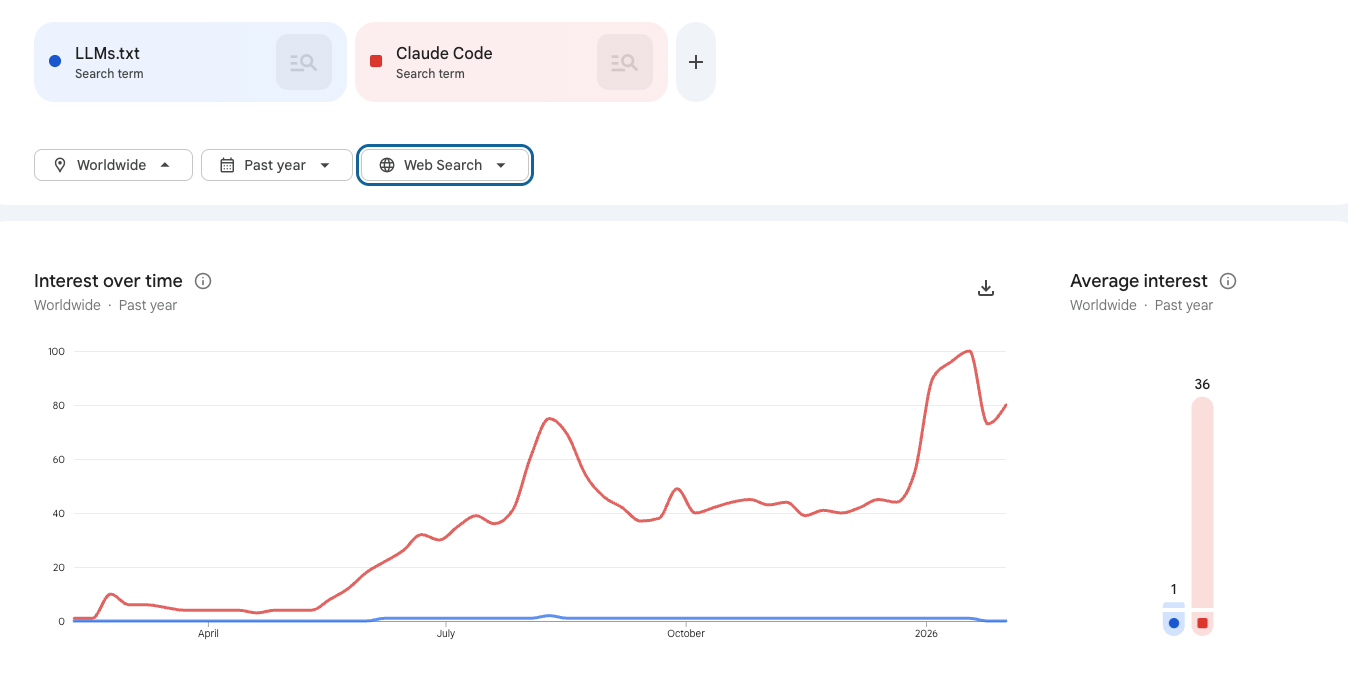

2. Adding Markdown endpoints (page.md) or LLMs.txt to your website is most likely a waste of time. Consumer AI platforms are not deliberately engineered to look for or consume this content.

It is not a standard, and while it’s mostly not harmful, the effort will mostly go unrewarded. Both consumer AI and coding agents look for information via “regular” web search and consume regular HTML from pages they find in search results.

Even coding agents don’t consume LLMs.txt by default. Some programmers will prompt Claude Code to look for them, but this was a niche behavior that’s now in decline.

I have a ton of respect for Jeremy Howard (an actual innovator in AI and the originator of LLMs.txt), but it was never intended as an SEO tool and I don’t think reading a big list of pages on your site as a single Markdown file is the optimal form factor for content discovery with modern reasoning, tool-calling LLMs. Leave LLMs.txt for bespoke RAG agent authors, where it belongs.

3. You want AI agents to consume content from your existing URLs. Those URLs are the ones that get cited back to the underlying human user. If AI is citing AI-specific URLs and humans are clicking through on them, where do those humans go from there? (I’ll give you a hint: not your checkout page.)

Fixing your existing website (is harder than it sounds)

Aside from “HTML is good, actually,” the takeaway here is: Expect most of your content to be found by an AI user agent operating a “regular-ish” search engine on its own terms.

There are no magic protocols that will make AI always choose the content you would prefer (SEO still matters because ranking still matters—it’s just not the only thing that matters).

Serving good HTML—straight from the server—with lots of readable text that is relevant, coherent, and concise at your existing URLs is the most evidence-backed way to improve how AI agents consume your content.

Consider this your agent-friendly HTML checklist:

- Clear structure

- Minimal technical or navigational cruft (e.g., do not serve 9,000 pages of nav and interstitial HTML before your four-paragraph article)

- Text alternatives for rich media

- Completeness (i.e., no missing content that only renders in the browser for humans)

- Relevant schema (remember: Reflect all meaningful schema content in page text—not all agents can see JSON-LD)

- Information density and comprehensiveness (AI agents mostly find single pages in search and even ones that can navigate sites don’t do so as often, so the more helpful any single page is, the more likely AI will use your content to answer the user’s question)

That last bullet isn’t an invitation to keyword stuffing. Don’t do that. Instead, ask yourself, “What other questions would someone reading this have?”

It’s been widely shared at this point, but it’s worth repeating: Unlike Googlebot, most AI agents only consume server-sent (”server-side rendered”) HTML: They won’t see any content on your pages that is rendered via JavaScript.

You don’t have to take my word for it. Look at your raw HTTP logs—you won’t see client-side assets being loaded by ChatGPT-User and friends.

A pragmatic approach: Serve differentiated content to AI at the edge

I’ve given the above advice to hundreds of people in one-on-one conversations over the past two years.

And it's been totally unactionable for most of them because it is remarkably hard for most website and digital experience teams to serve two masters: a complex, interactive, and aesthetic experience for human visitors and a simple, semantic, and information-dense content repository for AI agents.

It’s easy to see the appeal of approaches like LLMs.txt when the alternative is considering yet another website replatform. It’s just unfortunate that it doesn’t work.

AI agents “want” to consume HTML off of your existing URL structure. Good news: It's possible to serve them differentiated, agent-optimal HTML while keeping your human website the same.

The most reliable way to do this is to target known AI user agents and route them to a different experience at the CDN or load balancer.

The web was built with content negotiation in mind and, though the technical mechanism is messier, the principle holds. You should still serve HTML to AI agents—but it can be better HTML, with less technical cruft, more content structure, and denser information.

A control layer for how AI accesses your content

You will be unsurprised to learn that I make a product that helps enterprises manage exactly this challenge: targeting and serving AI agents content that is technically and semantically optimized for them, without disrupting your existing web experiences.

Since launching last summer, we’ve deployed Scrunch Agent Experience Platform (AXP) at very large enterprises in B2B SaaS, ecommerce, and entertainment (and across technology platforms like Akamai, AWS, and Cloudflare) with strong results.

The launch of Adobe LLM Optimizer late last year validates the soundness of this approach and likewise integrates at the CDN/edge layer.

What about cloaking?

The main objection I hear to the idea of “split-pathing” your website is, “What about cloaking?”

Cloaking is one of the classic pillars of Google’s anti-spam policies, which prohibits serving different content to Googlebot versus humans with deceptive intent (so, abundance of caution, don’t serve differentiated content to Googlebot).

In a nutshell, cloaking is “deceiving” the search engine into giving you unearned ranking, and thus clicks, or alternatively deceiving a human into clicking on your link under false pretenses.

But no AI-native platform has a cloaking policy today. Because the entire premise of cloaking doesn’t make sense in the AI search world.

In AI search, AI content retrievers consume page content and then directly answer a human user’s question based on the exact content they consumed. There is no deception here—what you serve to the AI bot is what goes into the LLM’s context window and ultimately what influences the experience the human gets.

The citation is a reference, but not the main point. And as we all know, humans don’t click on the majority of the citations in AI responses—“zero-click search,” remember?— but when they do, it’s to take action, not to read your webpage.

Fundamentally, in a zero-click world, there is much less opportunity for—or benefit from—the kind of bot versus human deception anti-cloaking policies are designed to prohibit.

The myth of “one experience”

Interestingly, in some ways the discourse about cloaking on modern websites is already a bit naive.

Modern, sophisticated web experiences are a tangle of feature flags, A/B tests, personalization platforms, and more—delivered at the edge, from the server, or via JavaScript SDKs that “patch” page content on the client side.

When we run technical discovery processes, I frequently ask questions like:

- What is the authoritative version of the page?

- What are you serving to Googlebot, or to any automated user agent that isn’t passing cookies, browsing with a persistent session, or being targeted by your feature flags?

Surprisingly, I’ve found that almost no one knows—there are too many different technologies, experiments, and departments with their hands on the tiller for any one person to say, “This is exactly what we’re serving.”

The reality is that the experience that any visitor (human or AI) gets on enterprise websites has increasingly become a stochastic process: You know what visitors see on average, but it's difficult to know exactly what experience any particular visitor will have.

Before AI search, this kind of variation in experience was mostly a wash. Your page still had most of the same content. If you’re serving variation A or variation Z, they’re probably about as relevant to the same search terms.

But with AI search, again, the exact content you’re serving to the bot is what the LLM consumes and uses to formulate answers.

If you inadvertently start serving ChatGPT-User the variant of your page personalized for millennial bluegrass enthusiasts, everybody who encounters your content in ChatGPT is hearing banjos.

Assessing where you stand

Before you jump on any AI search tactic, I suggest you validate it with real, first-party evidence and approach it with intentionality:

- Look at how AI agents are accessing your content today. You can’t do this in Google Analytics—you need to look at low-level HTTP access logs. Are they consuming pages that don’t make intuitive sense, like your terms of service or blank “search” pages? Are core content pages never consumed? These often indicate technical problems with AI accessibility.

- Ask questions about your content in major AI agents and don’t just look at whether you show up. Is the message of your content actually coming through to the user? Do the citations go to pages that make sense? Although marketers often focus on unbranded queries, starting with a hard look at the results of branded queries removes the variability of competitive ranking and can show you where you’re committing “own goals” with poor content compatibility.

- Think about the experience of AI agents and humans separately. AI user agents generally want to locate answers in as few steps as possible. Humans will navigate and browse. If you don’t split-path, at least consider adding tests for these agent ICPs to your testing criteria. Simply curling the content and feeding it into an LLM with questions can be a good start.

Traditional search indexing helps people find and experience your content. With AI search, AI agents consume your content and AI becomes the experience.

But you can and should exercise control over how your content is presented to agents.

Be intentional, look at your data, test behaviors, and iterate.